Did you include a `package.json` listing the required packages? It goes alongside the `server-scripts` folder.

For me, the error occurred because I forgot to do just that.

My package.json:

```json
{
  "name": "foo",
  "scripts": {},
  "dependencies": {
    "axios": "^0.21.1",
    "mysql": "2.18.1"
  },
  "devDependencies": {}
}
```

You will most likely also have to install them manually because of a Yarn cache issue; see here:

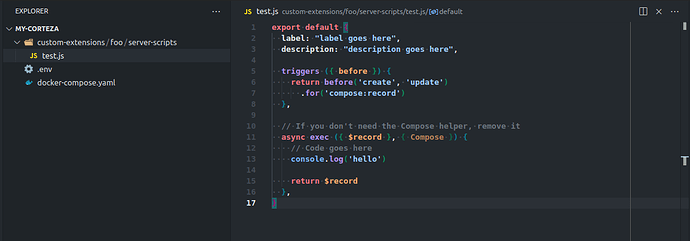
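If you're not sure whether the manual install actually took, a small Node check can list which declared dependencies fail to resolve. This is a rough sketch, not something Corteza provides: `missingDependencies` is a hypothetical helper, and it only checks that each package can be found, not which version is installed.

```javascript
// Hypothetical helper: return the names of dependencies declared in a parsed
// package.json that cannot be resolved. `resolve` defaults to Node's
// require.resolve, which looks up packages relative to this file's location.
function missingDependencies (pkg, resolve = require.resolve) {
  const names = Object.keys(pkg.dependencies || {})
  return names.filter((name) => {
    try {
      resolve(name)
      return false // found, so not missing
    } catch (err) {
      return true // resolution failed, so report it
    }
  })
}
```

Saved as a file next to `package.json` (so `require.resolve` searches that folder's `node_modules`), `console.log(missingDependencies(require('./package.json')))` prints an empty array when everything is installed.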

Then, for the sake of completeness, here is my script, which suggests the setup works (I used MySQL):

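```js
const mysql = require('mysql')

export default {
  label: "label goes here",
  description: "description goes here",

  triggers ({ before }) {
    return before('create', 'update')
      .for('compose:record')
  },

  // If you don't need the Compose helper, remove it
  async exec ({ $record }, { Compose }) {
    // Code goes here
    console.log('hello')

    const con = mysql.createConnection({
      host: 'db2', // hostname of the DB service on the shared network
      port: 3306,
      user: 'dbuser',
      password: 'dbpass',
      database: 'dbname',
      charset: 'utf8mb4_general_ci',
      insecureAuth: true,
    })

    try {
      // connect() reports errors through its callback, so a plain try/catch
      // never sees them; wrap it in a Promise and await it instead
      await new Promise((resolve, reject) => {
        con.connect((err) => (err ? reject(err) : resolve()))
      })
      console.log('Connected!')
      con.end() // close the connection once we're done with it
    } catch (err) {
      console.error(err)
      con.destroy() // connecting failed; just tear down the socket
    }

    return $record
  },
}
```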

As far as networking goes, if both services are on the same network (`internal` in my case), the script can reach the database by its service name; that's why my host is `db2`.

I did, however, need to do this to make authentication pass.
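To tell a networking problem apart from an authentication problem, a plain TCP probe shows whether the database port is reachable at all. This is a minimal sketch using Node's built-in `net` module; `checkReachable` is a hypothetical helper name, and a successful probe says nothing about whether the MySQL credentials are accepted.

```javascript
const net = require('net')

// Hypothetical helper: resolves true if a TCP connection to host:port opens
// within timeoutMs, false on error (e.g. ECONNREFUSED, unknown host) or timeout.
function checkReachable (host, port, timeoutMs = 2000) {
  return new Promise((resolve) => {
    const socket = net.createConnection({ host, port })
    const finish = (ok) => {
      socket.destroy() // we only wanted to know if the port answers
      resolve(ok)
    }
    socket.setTimeout(timeoutMs, () => finish(false))
    socket.once('connect', () => finish(true))
    socket.once('error', () => finish(false))
  })
}
```

From inside the script, something like `checkReachable('db2', 3306).then((ok) => console.log(ok ? 'db2:3306 reachable' : 'db2:3306 not reachable'))` narrows things down: if it logs "not reachable", look at the network setup before touching auth settings.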

I haven’t worked with databases in quite a while, so I just blindly copy-pasted stuff that looked about right; for your production instance (mine is local), you’ll probably need to be more careful.
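One easy step in that direction is not hard-coding credentials in the script. Below is a rough sketch that builds the `mysql` connection options from environment variables; the variable names (`DB_HOST`, `DB_PORT`, `DB_USER`, `DB_PASSWORD`, `DB_NAME`) are assumptions, so use whatever your deployment actually sets. It deliberately leaves out `insecureAuth`; add it back only if your server really requires the old authentication method.

```javascript
// Hypothetical helper: build mysql connection options from environment
// variables. The DB_* names are illustrative, not a Corteza convention.
function dbConfigFromEnv (env = process.env) {
  const required = ['DB_USER', 'DB_PASSWORD', 'DB_NAME']
  const missing = required.filter((name) => !env[name])
  if (missing.length > 0) {
    throw new Error(`Missing environment variables: ${missing.join(', ')}`)
  }
  return {
    host: env.DB_HOST || 'db2', // fall back to the service name used above
    port: Number(env.DB_PORT || 3306),
    user: env.DB_USER,
    password: env.DB_PASSWORD,
    database: env.DB_NAME,
    charset: 'utf8mb4_general_ci',
  }
}
```

In the script, `mysql.createConnection(dbConfigFromEnv())` would then replace the inline options object; failing fast on missing variables gives a clearer error than a rejected login.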